Centripetal City

The myth of the network

Kazys Varnelis

On 19 July 2001, a train shipping hydrochloric acid, computer paper, wood-pulp bales and other items from North Carolina to New Jersey derails in a tunnel under downtown Baltimore. Later estimated to have reached 1,500 degrees, the ensuing fire is hot enough to make the boxcars glow. A toxic cloud forces the evacuation of several city blocks. By its second day, the blaze melts a pipe containing fiber-optic lines laid along the railroad right-of-way, disrupting telecommunications traffic on a critical New York–Miami axis. Cell phones in suburban Maryland fail. The New York–based Hearst Corporation loses its email and the ability to update its web pages. Worldcom, PSINet, and Abovenet report problems. Slowdowns are seen as far away as Atlanta, Seattle, and Los Angeles, and the American embassy in Lusaka, Zambia loses all contact with Washington.

The explosive growth and diversification of telecommunications in the last three decades have transformed how we exchange information. With old divisions undone, email, telephone, video, sound, and computer data are reduced to their constituent bits and flow over the same networks. Both anarchistic hackers and new-economy boosters proclaim the Internet to be a new kind of space, an electronic parallel universe removed from the physical world. It is tempting, when our telecommunication systems function properly, to get caught up in the rhetoric of libertarians like George Gilder and Alvin Toffler, who praise cyberspace as a leveler of hierarchies and a natural poison to bureaucracies, or to listen to post-Communist radicals as they declare social, digital, and economic frameworks obsolete, and profess their faith in Deleuzean “rhizomatic” networks—multidirectional, highly interconnected meshworks like those created by the roots of plants. It is easy, on a normal day, to believe that the Net exists only as an ether, devoid of corporeal substance. But this vision is at odds with the reality of 19 July 2001. When the physical world intrudes, we confront the fact that modern telecommunications systems are far from rhizomatic, and act instead as centralized products of a long historical evolution. The utopian vision of a network without hierarchies is an illusion—an attractive theory that has never been implemented except as ideology.

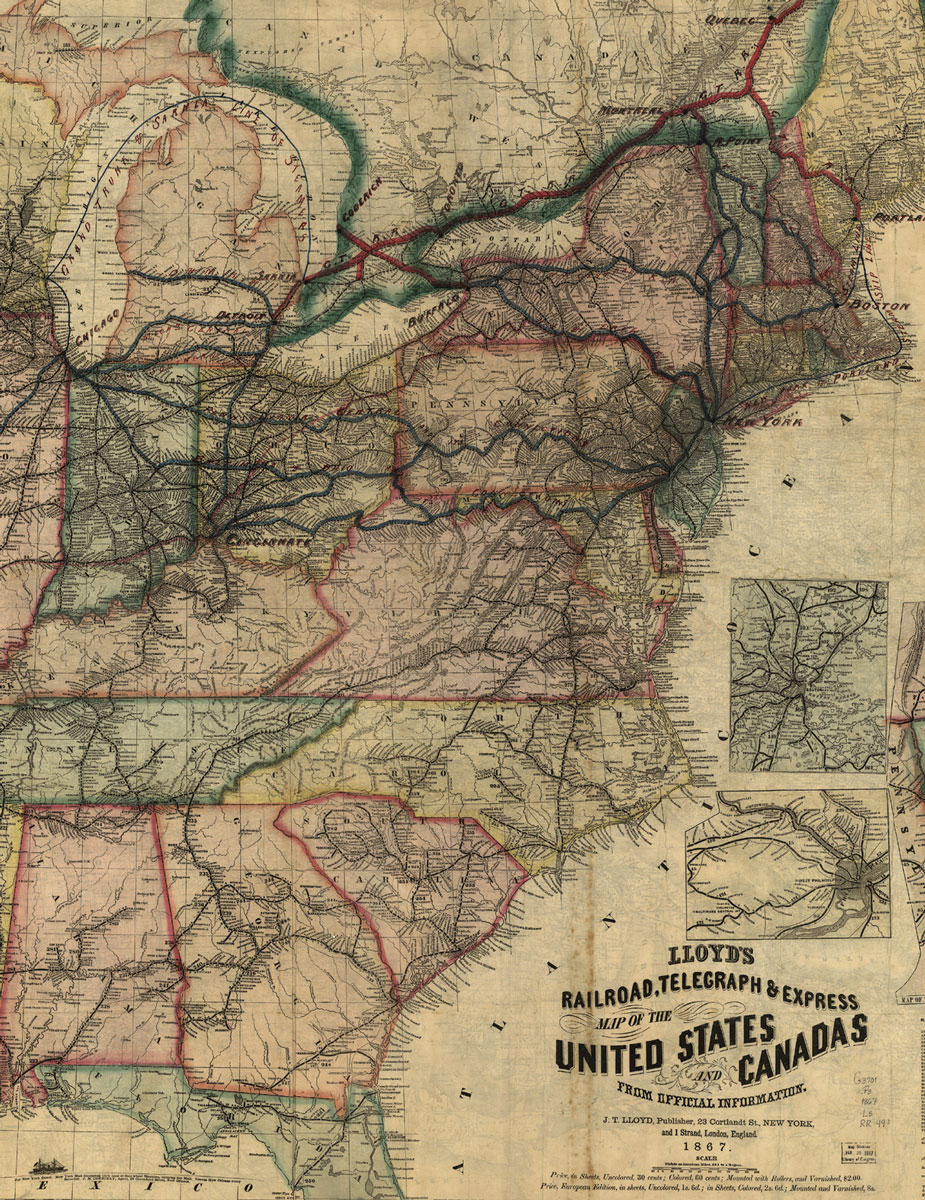

If telecommunications disperses individuals, it concentrates structures, reinforcing the fundamental simultaneity of the centripetal and centrifugal forces that drive capital and the modern city. This is nothing new: downtown has always been dependent on both suburbs and rural territories. The remarkable density of the nineteenth-century urban center could develop only when homes and factories were removed from the city core via the spatially dispersive technologies of the commuter railroad and the telephone, while industrial, urban capital required the railroad and steamship to facilitate exploitation of the American continent and connection to global markets. As the Baltimore train wreck demonstrates, new infrastructures do not so much supersede old ones as ride on top of them, forming physical and organizational palimpsests: telephone lines follow railway lines, and over time these pathways have not been diffused, but rather etched more deeply into the urban landscape.

Modern telecommunications emerged in the mid-nineteenth century. The optical telegraph, invented in the 1790s and based on semaphore signaling systems, had by the 1830s formed a network across Europe, allowing messages to be transmitted from Paris to Amsterdam and from Brest to Venice. In 1850, at its peak, the French system alone included at least 534 stations, and covered some three thousand miles. But optical telegraphs were hampered by bad weather, and the expense of manning the closely spaced stations largely limited them to serving as early warning systems for military invasion.[1] It took American artist Samuel F. B. Morse’s invention of the electric telegraph in 1837 to make possible an economical system of telecommunications. Initially Morse’s simple system of dashes, dots, and silences was received with skepticism. Only with the opening of a line between New York and Philadelphia in 1846 did the telegraph take off.[2] By 1850, the United States was home to twelve thousand miles of telegraph operated by twenty different companies. By 1861, a transcontinental line was established, anticipating the first transcontinental railroad by eight years and shuttering the nineteen-month-old Pony Express with its ten-day coast-to-coast relay system. In 1866, a transatlantic cable was completed, and Europe’s optical telegraph declined swiftly, leaving only a scattering of “telegraph hills” as traces on the landscape.

The electric telegraph’s heyday, however, was also short; the invention of the telephone by Alexander Graham Bell in 1876 gave individuals access to a network previously limited to telegraph operators in their offices. By 1880, thirty thousand phones were connected nationwide, and by the end of the century there were some two million phones worldwide, with one in every ten American homes. Still, the telegraph did not merely fade away. It retained its popularity among businessmen who preferred its written record and continued to dominate intercity traffic for decades to come.[3]

At the turn of the century, telephone and telegraph teamed with the railroad to simultaneously densify and disperse the American urban landscape. Making possible requests for the rail delivery of goods over large distances, telecommunications stimulated a burst of sales and productivity nationwide. This produced a corollary growth in paperwork, which, in turn, demanded new infrastructures, both architectural and human. Vertical files and vertical office buildings proliferated as sites of storage and production, and a new, scientifically oriented manager class emerged.[4] In 1860, the US census listed some 750,000 people in various professional service positions; by 1890, the number had ballooned to 2.1 million, and by 1910 it had doubled again, to 4.4 million. In 1919, Upton Sinclair dubbed these people “white collar” workers.[5] Often trained as civil and mechanical engineers, they tracked the burgeoning commerce through numerical information.[6] New machines aided them: the mid-1870s saw the development of the typewriter and, soon after, carbon paper; the cash register, invented in 1882 to prevent theft, could collect sales data by 1884. Modern adding machines and calculators emerged in the latter half of the decade and, in the 1890s, the mimeograph made possible the production of copies by the hundred.

The result transformed the city. Commuter rail allowed white collar workers to live outside the downtown business districts and, as industry came to rely more and more on rail for shipment, production left the increasingly congested core for the periphery, where it was based in buildings that, for fireproofing purposes, were physically separated from each other. The telephone tied building to building, and linked the rapidly spreading city to its hub. Understanding that the phone was reshaping the city, phone companies and municipalities worked closely together, the former relying for their network expansion on zoning plans legislated by the latter.[7]

Eventually, Bell’s company came to dominate the telephone system, while Western Union controlled the telegraph. Initially, however, this relative equilibrium in the industry was far from certain. Between 1877 and 1879, Western Union had begun to diversify from telegraph services by producing telephones based on alternative designs by Thomas Edison and Elisha Gray. Bell filed a lawsuit claiming patent infringement, and an out-of-court settlement left him in possession of a national monopoly. Opportunities for competition arose again when Bell’s patents expired in 1893 and 1894, and thousands of independent phone companies sprang up, serving rural hinterlands where Bell did not want to go. But, by refusing to connect his lines to these independents, Bell ensured that long-distance service, a luxury feature, was available to his subscribers only.[8]

This desire to eliminate competitors was not universally appreciated in the Progressive era. In 1910, Bell’s company, which had taken the name American Telephone and Telegraph in 1900, purchased the larger and better-known Western Union. This move stimulated anti-corporate sentiment, and the risk of governmental antitrust action loomed, a far from idle threat given the 1911 breakup of the Standard Oil Company. In late 1913, AT&T took preemptive measures, in the form of a document called the Kingsbury Commitment. The giant agreed to sell off Western Union, and to permit the independents access to its lines. Over the next decade, a partnership evolved between AT&T and the government, with an understanding that in exchange for near-monopoly status, the company would deliver universal access to the public by building a network in outlying areas. AT&T thus avoided antitrust legislation to emerge in total control not only of the long-distance lines but, through its twenty-two regional Bell operating companies, of virtually every significant urban area in the country. AT&T owned everything from the interstate infrastructure to the wiring and equipment in subscribers’ homes.[9]

This early period established a topology of communications that existed until the Bell System’s breakup in 1984. Individual phones were connected to exchanges at the company office (to this day, one’s distance from the company office determines the maximum speed of one’s DSL connection) and these exchanges connected to a central switching station inevitably located in the city core, where the greatest density of telephones would be found. In 1910, the same year that Bell bought Western Union, General George Owen Squier (then head of the Army’s Signal Corps, and the future founder of Muzak Corporation) developed a technology called multiplexing. By modulating the frequency of the signals so that they would not interfere with each other, multiplexing permitted transmission of multiple, simultaneous messages over one cable. Multiplexed connections were used on long-distance lines beginning in 1918.[10] After World War II, however, the high cost of copper wire comprising the multiplexed network, coupled with rising demand for bandwidth and growing fear that nuclear war would wreak havoc on continuous wire connections, led engineers to develop microwave transmission for long distances. In the 1950s and 1960s, adopting the motto “Communications is the foundation of democracy,” AT&T touted its microwave “Long Lines” network as a crucial defense in the Cold War. Then, in 1962, AT&T launched Telstar, the world’s first commercial communications satellite, which the company hoped would allow it to provide a 99.9 percent connection between any two points on the earth at any time, while further increasing communications survivability after atomic war.[11] Ironically, Telstar operated only six months instead of a planned two years, succumbing to radiation from Starfish I, a high-altitude nuclear test conducted by the United States Army the day before Telstar’s launch.

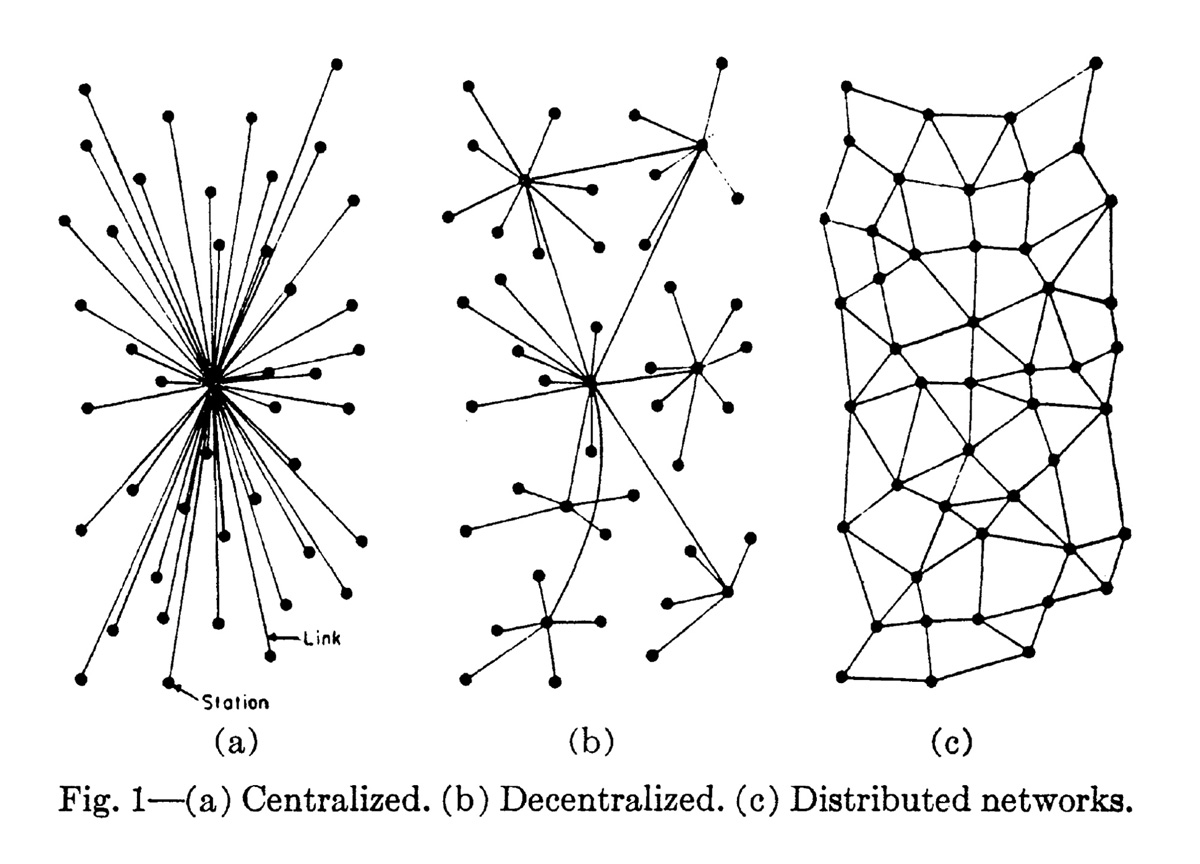

Even with the development of the microwave transmission system and the hardening of key buildings against atomic attack, the vulnerability of satellites to enemy destruction remained an open question. American computer scientist Paul A. Baran, a researcher at the Cold-War think-tank the RAND Corporation, felt that continued use of the centralized model of communications left the country vulnerable to extreme disruption during a nuclear first strike. With the loss of the city center and the destruction of the central switching station, Baran realized, all intercity communications would be destroyed.

The popular idea of the Internet as a centerless, distributed system stems from Baran’s eleven-volume proposal for a military network that could survive a nuclear first strike and maintain the centralized, top-down chain of command. Such a system was essential, Baran felt, so that the other alternative—giving individual field commanders authority over nuclear weapons—would not be necessary.

Baran proposed a new military network for telephone and data communications to be located entirely outside of strategic targets such as city cores. He identified three forms of networks: centralized, decentralized, and distributed. In the centralized network, with the loss of the center, all communications cease. Decentralized networks, with many nodes, are slightly better, but are still vulnerable to MIRV (Multiple Independently Targetable Reentry Vehicle) warheads. Baran’s network would be distributed and hard to kill: each point would function as a node and central functions would be dispersed equally.
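The difference between the three forms can be made concrete with a toy model. The Python sketch below is an illustration only, not Baran’s own analysis: the function names (`star`, `hub_cluster`, `grid`, `surviving_fraction`), the twenty-five-station network sizes, and the rule that each strike removes the best-connected surviving station are all assumptions made for the example.

```python
from collections import deque


def star(n_leaves):
    """Centralized: every station links only to hub 0."""
    g = {0: set()}
    for i in range(1, n_leaves + 1):
        g[0].add(i)
        g[i] = {0}
    return g


def hub_cluster(n_hubs, leaves_per_hub):
    """Decentralized: hubs joined in a ring, each serving its own stations."""
    g = {h: set() for h in range(n_hubs)}
    for h in range(n_hubs):
        nxt = (h + 1) % n_hubs
        g[h].add(nxt)
        g[nxt].add(h)
    node = n_hubs
    for h in range(n_hubs):
        for _ in range(leaves_per_hub):
            g[node] = {h}
            g[h].add(node)
            node += 1
    return g


def grid(side):
    """Distributed: a square mesh, each station linked to its neighbors."""
    g = {(r, c): set() for r in range(side) for c in range(side)}
    for r in range(side):
        for c in range(side):
            for dr, dc in ((0, 1), (1, 0)):
                rr, cc = r + dr, c + dc
                if rr < side and cc < side:
                    g[(r, c)].add((rr, cc))
                    g[(rr, cc)].add((r, c))
    return g


def surviving_fraction(graph, strikes):
    """Remove the `strikes` best-connected stations (assumed attack rule),
    then return the share of the original stations that can still reach
    one another in the largest connected group."""
    total = len(graph)
    g = {n: set(nb) for n, nb in graph.items()}  # work on a copy
    for _ in range(strikes):
        target = max(g, key=lambda n: len(g[n]))
        for nb in g.pop(target):
            g[nb].discard(target)
    best, seen = 0, set()
    for start in g:  # breadth-first search over each remaining group
        if start in seen:
            continue
        seen.add(start)
        queue, size = deque([start]), 0
        while queue:
            n = queue.popleft()
            size += 1
            for nb in g[n]:
                if nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        best = max(best, size)
    return best / total


networks = {
    "centralized": star(24),
    "decentralized": hub_cluster(5, 4),
    "distributed": grid(5),
}
for name, net in networks.items():
    print(name, [surviving_fraction(net, k) for k in (0, 1, 2)])
```

In this toy setup a single strike on the hub leaves the centralized network with no two stations able to talk, the decentralized network loses a further large block with each hub destroyed, and the mesh stays almost whole. Real networks are of course not regular grids, so the numbers matter less than the ordering.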

Designed not for present efficiency but for future survivability even after heavy damage during nuclear war, Baran’s system broke messages down into discrete “packets” and routed them on redundant paths to their destinations. Errors were not avoided but rather expected. This system had the advantage of allowing individual sections of messages to be rerouted or even retransmitted when necessary and, as computers tend to communicate with each other in short bursts, would also take advantage of slowdowns and gaps in communication to optimize load on the lines. Baran’s model, however, was never realized: the proposal fell victim to a military bureaucracy unable to see its virtues.[12]
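The packet idea described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a reconstruction of Baran’s routing scheme: the helper names (`to_packets`, `send`, `reassemble`), the three named paths, and their loss probabilities are all hypothetical, and a lost packet is simply retried on a randomly chosen path.

```python
import random


def to_packets(message, size):
    """Split a message into numbered packets of at most `size` characters."""
    return [(seq, message[i:i + size])
            for seq, i in enumerate(range(0, len(message), size))]


def send(packets, paths, rng, max_attempts=50):
    """Deliver each packet over one of several redundant paths.

    `paths` maps a path name to its assumed probability of losing a
    packet. Loss is expected: a lost packet is retransmitted, possibly
    over a different path, until it gets through.
    """
    received = {}
    attempts = 0
    for seq, chunk in packets:
        for _ in range(max_attempts):
            attempts += 1
            path = rng.choice(sorted(paths))
            if rng.random() >= paths[path]:  # packet survived this path
                received[seq] = chunk
                break
        else:
            raise RuntimeError(f"packet {seq} could not be delivered")
    return received, attempts


def reassemble(received):
    """Sequence numbers restore the original order of the packets."""
    return "".join(received[seq] for seq in sorted(received))


rng = random.Random(1964)  # fixed seed so the run is repeatable
message = "Errors were not avoided but rather expected."
# Hypothetical paths: one lossy, one fairly reliable, one destroyed outright.
paths = {"north": 0.5, "south": 0.2, "dead": 1.0}

packets = to_packets(message, 8)
received, attempts = send(packets, paths, rng)
print(reassemble(received))
print(f"{len(packets)} packets delivered in {attempts} attempts")
```

Even with one path dead and another losing half its traffic, the message arrives intact; the cost shows up only as extra attempts, which is the trade of present efficiency for survivability that the design accepts.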

Instead, the Internet as we know it is the outgrowth of ARPANET, another military project that produced the first successful intercity data network. Established in 1958 to ensure US scientific superiority after the launch of Sputnik, the Department of Defense’s Advanced Research Projects Agency was implanted in universities throughout the country. ARPANET was designed to overcome isolation between these geographically separated offices, without undoing the wider range of possibilities created by diversity in location. Initially, the focus was on data-sharing and load-sharing. (The latter was facilitated by the range of continental time zones: as one technician slept, a colleague in another time zone would take advantage of otherwise idle equipment.) Few experts thought communication could become a significant use of the data network, and when email was introduced, in 1972, it was only as a means of coordinating seemingly more important tasks. ARPANET’s internal structure was a hybrid between distributed and decentralized. But, as it leased telephone lines from AT&T, its real, physical structure could not overcome the dominance of metropolitan centralization.[13]

With computer networks like ARPANET proliferating, researchers developed an internetworking system to pass information back and forth. First tested in 1977 and dubbed the Internet, this single system is the foundation for the global telecommunicational system we know today. During the 1980s, the Internet opened to non-military sites through the National Science Foundation’s NSFNet, a nationwide network that connected supercomputing sites at major universities through a high-capacity national “backbone.” Through the backbone, university-driven computing super-centers, such as Ithaca, New York, and Champaign-Urbana, Illinois, became as wired as any big city. To counter this dominance, the NSFNet made these universities, and eventually other non-profit institutions as well, act as centers of regional networks. Again, Baran’s distributed network was rejected, replaced by a decentralized model in which—even if there is no national center for the Internet—local topologies are centralized in command-and-control hubs.

The NSFNet grew swiftly while the ARPANET became obsolete, its dedicated lines running at 56 kilobits per second—as fast as today’s modems. In 1988 and 1989, ARPANET transferred entirely to the NSFNet, ending military control over the Internet. As a government-run entity, the backbone was still restricted from carrying commercial traffic. In 1991, however, new service providers teamed up to form a Commercial Internet Exchange for carrying traffic over privately owned long-haul networks. With network traffic and technology continuing to grow, in 1995 the government ended the operation of the NSFNet backbone, and operation of the Internet was privatized.

With the exponential growth of the Internet following privatization, its tendency toward centralization on the local or regional level continues. The commercial Internet has followed the money, thereby reinforcing the existing system of networking.[14] The Internet and telephone system are inextricably tied together today: not only are analog modems and DSL connections run over telephone lines, but faster T1 and T3 lines are also simply dedicated phone lines. To understand how today’s Internet is built, then, we need to turn back to the telephone system.

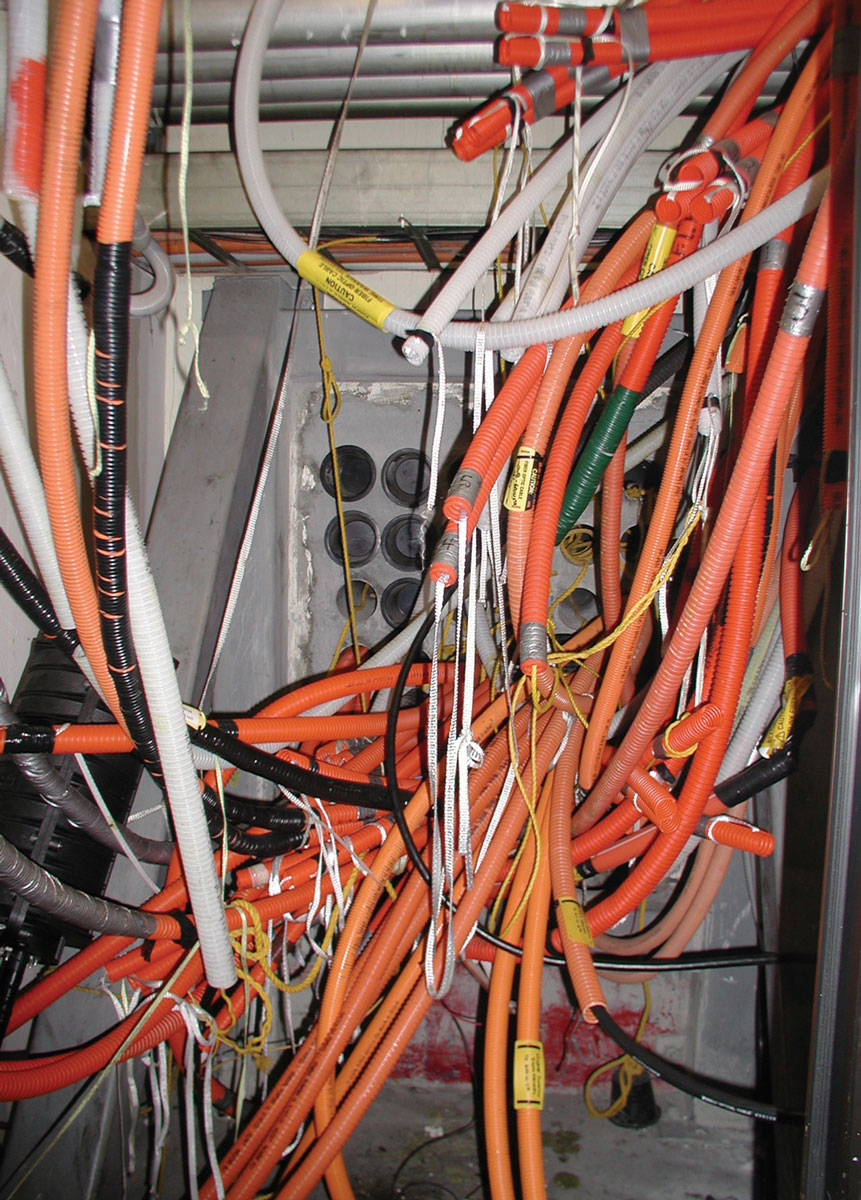

AT&T’s breakup into the Baby Bells in 1984, together with subsequent legislation further deregulating the industry, triggered competition at every level. But it did not fundamentally change the centralization of telephone service. As before, the key for long-distance carriers was interface with the local system at the central office. But the central office was now controlled by whichever Baby Bell provided regional service. By 1990, fiber optics had surpassed satellite technology as a means of intercontinental communication, and had even begun to challenge the dominance of microwave towers. AT&T’s vast Cold War project was forced into obsolescence, and the company auctioned its “Long Lines” system to cell phone carriers seeking sites for towers. But fiber is expensive, and it is far more cost-effective to follow existing infrastructural routes and right-of-way easements than to create new pathways: hence the fiber optic cable in the train tunnel in Baltimore.

Within cities, lines concentrate in carrier “hotels,” otherwise known as telco or telecom hotels. The history of the carrier hotel at the One Wilshire tower in Los Angeles is an example of the current system. In Los Angeles, the central switching station, now owned by SBC, is at 400 S. Grand, downtown. Although competing carriers are, by law, allowed access to the lines at the central switching station, SBC does not have to provide them with space for their equipment. Over a decade ago, in order to house their competing long-distance lines in close proximity to the 400 S. Grand station, MCI—which had its own nationwide microwave network—mounted a rooftop microwave station on One Wilshire, which is only three thousand feet from the central switching station and was at the time one of the tallest buildings downtown. With One Wilshire providing a competitor-friendly environment, long-distance carriers, ISPs, and other networking companies began to lay fiber to the structure. While the microwave towers on top have dwindled in importance—they are now used by Verizon for connection to its cellphone network—the vast amount of underground fiber running out of One Wilshire allows companies many possibilities to interconnect. These attractions allow One Wilshire’s management to charge the highest per-square-foot rents on the North American continent.

Such centralization defies predictions that the Internet and new technologies will undo cities or initiate a new era of dispersion. The historical role that telecommunications has played in shaping the American city demonstrates that, although new technologies have made possible the increasing sprawl of the city since the late nineteenth century, they have also concentrated urban density. Today, low- and medium-bandwidth connections allow employees to live and work far from their offices, and offices to disperse onto cheaper land on the periphery. At the same time, however, telecommunications technology and strategic resources continue to concentrate in urban cores that increasingly take the form of megacities, which act as command points in the world economy. In these sites, uneven development will be the rule, as the invisible city below determines construction above. In telecom terms, a fiber-bereft desert can easily lie just a mile from One Wilshire.[15]

Moreover, the Internet’s failure to adopt Paul Baran’s model of the truly distributed system means that it remains vulnerable to events like the Baltimore tunnel fire. If Al Qaeda had targeted telecom hubs in New York at 60 Hudson Street or at the AT&T Long Lines Building at 33 Thomas Street, or had taken down One Wilshire, the toll in life would have been far smaller. Carrier hotels have few occupants. But the lasting economic effect, both locally and globally, might have been worse. Losing a major carrier hotel or a central switching station could result in the loss of all copper-wire and most cellular telephone service in a city, as well as the loss of 911 emergency services, Internet access, and most corporate networks. Given that many carrier hotels on the coasts are also key nodes in intercontinental telephone and data traffic, losing these structures could disrupt communications that we depend on worldwide.

The Net may appear to live up to Arthur C. Clarke’s idea of a technology so advanced that it is indistinguishable from magic. But whenever we see magic we should be on guard, for there is always a precarious reality undergirding the illusion.

1. Anton A. Huurdeman, The Worldwide History of Telecommunications (Hoboken, NJ: J. Wiley, 2003), p. 37.

2. Ibid., pp. 55–61. Also see Tom Standage, The Victorian Internet: The Remarkable Story of the Telegraph and the Nineteenth Century’s On-Line Pioneers (New York: Walker and Co., 1998), pp. 22–56.

3. Standage, The Victorian Internet, pp. 196–200. See also Claude S. Fischer, America Calling: A Social History of the Telephone to 1940 (Berkeley: University of California Press, 1992), pp. 33–37.

4. Alfred D. Chandler, Jr., “The Information Age in Historical Perspective: An Introduction,” in Alfred D. Chandler, Jr. and James W. Cortada, eds., A Nation Transformed: How Information Has Shaped The United States from Colonial Times to the Present (New York: Oxford University Press, 2000), p. 18.

5. Donald Albrecht, Chrysanthe B. Broikos, and National Building Museum, On The Job: Design and the American Office (New York: Princeton Architectural Press, 2000), p. 18.

6. On systematic management, see JoAnne Yates, “Business Use of Information and Technology During the Information Age,” in Chandler and Cortada, A Nation Transformed, pp. 107–135.

7. See John Stilgoe, Metropolitan Corridor (New Haven, CT: Yale University Press, 1985) and Robert Fogelson, Downtown: Its Rise and Fall, 1880–1950 (New Haven, CT: Yale University Press, 2001).

8. George P. Oslin, The Story of Telecommunications (Macon, GA: Mercer University Press, 1992), pp. 220–231.

9. Fischer, America Calling, pp. 37–59.

10. Huurdeman, Worldwide History, pp. 334–335.

11. Oslin, The Story of Telecommunications, pp. 341–357. A number of websites chronicling the activities of the AT&T long lines division exist. Among the best are drgibson.com/towers; long-lines.net; groups.yahoo.com/group/coldwarcomms [Link defunct. Eds.]; and beatriceco.com/bti/porticus/bell/bell.htm. All accessed 30 July 2012.

12. See Janet Abbate, Inventing the Internet (Cambridge, MA: The MIT Press, 1999), pp. 7–21.

13. Ibid., pp. 113–145.

14. Ibid., pp. 191–205.

15. On the role of telecommunications in the rise of the Megacity, see Manuel Castells, The Rise of the Network Society (London: Blackwell, 2000), 2nd ed.; Stephen Graham and Simon Marvin, Telecommunications and the City (London: Routledge, 1996); and Saskia Sassen, The Global City: New York, London, Tokyo (Princeton, NJ: Princeton University Press, 1991).

Kazys Varnelis (www.varnelis.net) is currently teaching the history and theory of architecture at the University of Pennsylvania. He is president of the Los Angeles Forum for Architecture and Urban Design (www.laforum.org) and a founding principal of the non-profit architectural collective AUDC (www.audc.org).
