Things That Think: An Interview with Nicholas Gessler

The shape of pre-silicon computing

Margaret Wertheim and Nicholas Gessler

As we move into the age of “ubiquitous computing,” information appears to be losing its materiality. Computation is increasingly hidden on microchips and sealed in plastic beyond the sizzling screens of our laptops, behind the stylish skins of our appliances, and under the hoods of our automobiles. “It’s a pleasure to be seduced by these sleek machines,” says computer collector Nick Gessler, “but looking under their thin veneers is a good sanity check.” Before the invention of the microchip, both computational processors and the memory devices on which they stored data were tangible, visible objects. Gessler has amassed an armada of “things that think,” a collection that reminds us that information is literally informed and must always be physically embodied. His archive includes a nineteenth-century Jacquard loom; a still-working system of Danny Hillis’s legendary supercomputer, the Connection Machine; punch cards used for weaving patterns in fabric; lacy nets of “core” memory; sculptural modules of “cam” memory; and myriad cryptographic devices. When not trawling through aerospace industry surplus, Gessler is a researcher at UCLA in the emerging field of artificial culture, which extends work begun with distributed artificial intelligence and artificial life to large-scale modeling of social and cultural systems. In 2003, he co-founded the Human Complex Systems Program in UCLA’s Social Sciences Division. Originally trained as a traditional anthropologist, Gessler was formerly director of the Queen Charlotte Islands Museum of indigenous culture in Canada. In December, he gave a show-and-tell talk about embodied computation at the Institute for Figuring in Los Angeles. On the eve of that event, he showed IFF director Margaret Wertheim a portion of his extensive collection at his home in the Santa Monica Mountains.

Cabinet: When did your interest in computational devices begin?

Nicholas Gessler: I started my university career in the mid-1960s in engineering. There was a computing club at UCLA, so my first experience with programming was on an IBM 1620 mainframe and I guess we did what most people did when they were beginning computing then—we wrote programs to draw pictures on typewriters with little typewriter elements.

But you didn’t go on to study computing; you went on to do anthropology instead.

I was always interested in the evolution of culture. My parents had been very interested in pre-Columbian and Peruvian antiquities and I was raised in a kind of museum environment and longed for that sense of discovering old technologies. After a couple of years, I transferred from engineering to anthropology and archaeology. I ultimately did my own research in British Columbia on an early Haida Indian village on the Queen Charlotte Islands. I was interested in cultural change as reflected in technological items. When I returned to UCLA in the mid-1990s to finish a doctorate, I became interested in the field of artificial life and evolutionary computing. Essentially what I do now is the same thing I was doing as an anthropologist—trying to take ideas in the social sciences and make them more scientific and to translate these into complex computational terms.

As an archaeologist, you were exploring the history of other cultures; now you are excavating the early history of computing. What is the link you see here?

In doing archaeology, I was trying to come to grips with the cultural changes in the Pacific Northwest. A lot of this was brought about by technological change. In teaching artificial culture, I try to get my students to think about how they might describe social processes in computational terms. Ninety percent of my students have never had any programming experience and many of them regard a computer as a mysterious device sitting on their desktop. I really like to impress on them the fact that these items didn’t appear from nowhere. They also have a history. That is essentially how I got into collecting “things that think”—to borrow a phrase that originated at the MIT Media Lab.

Today we take massive amounts of computer memory for granted—we’re talking about terabytes on our desktops. What you’re collecting are pre-microchip devices, right?

Actually, I collect microchips as well, but it’s a little hard to go inside a microchip and show somebody what’s happening. If you have a microscope handy, you can do that, but it’s much easier to work with older material where you can see how the operations are proceeding physically.

Can you talk about some of the early kinds of computational devices?

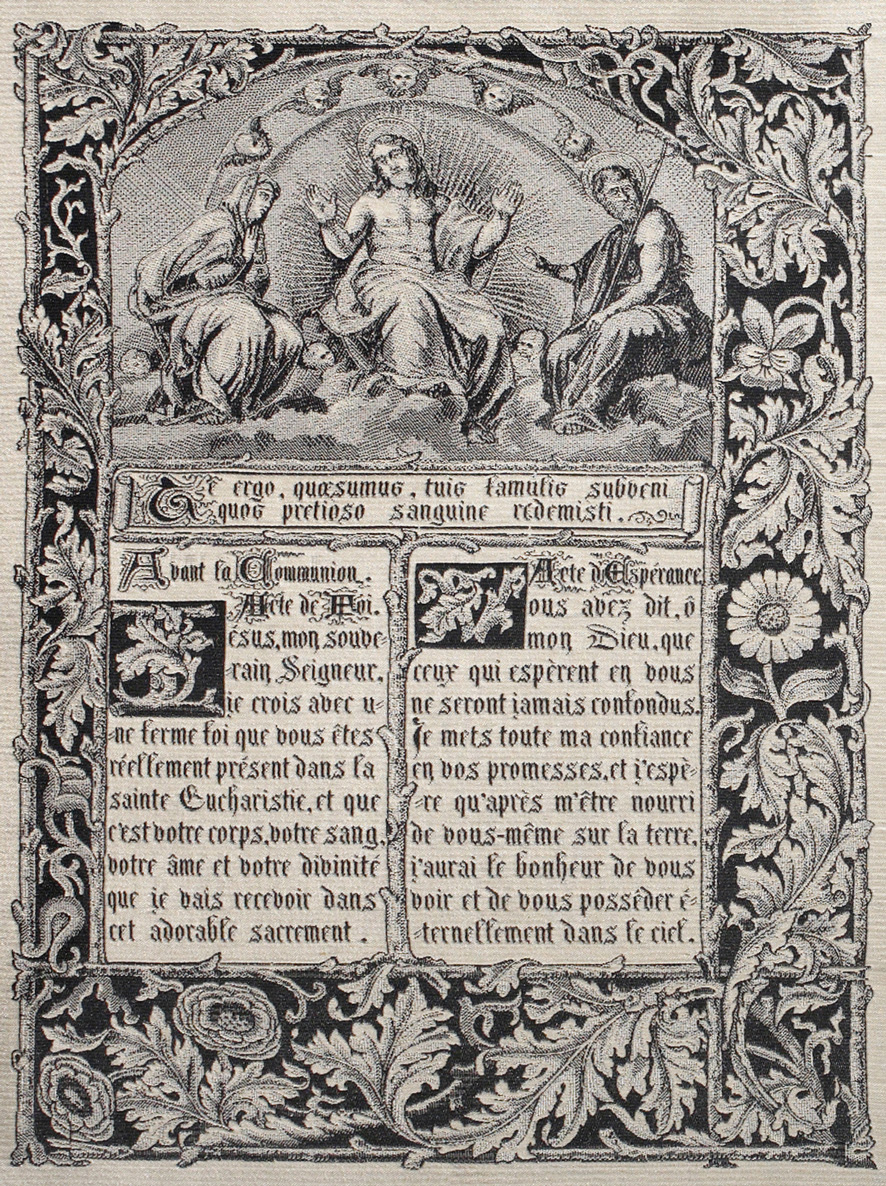

You can go way, way back, but people tend to start with the Jacquard loom as a significant device in the origins of computing. Joseph Jacquard (1752–1834) was interested in improving looms in order to create complex weaving patterns. Prior to this, there was a drawboy who knew which warp-threads to lift to produce a given pattern. The drawboy was an actual human being who sat atop the loom and pulled the strings as a puppeteer might. Before Jacquard, there were some technological enhancements to this job, but eventually the drawboy was replaced by a series of mechanical devices. Jacquard took a number of existing inventions and perfected them to such an extent that he essentially revolutionized the weaving industry. Initially, French weavers destroyed the looms because they feared unemployment, but then the French government took over the invention and Jacquard was given a royalty on every loom sold. His personal contribution consists of two innovations: the one that is probably the most important is the use of the punch card, although he did not really invent that either. You might say he perfected it. The punch card stores information on which group of warp threads to raise with each passage of the shuttle, and a series of these cards were laced together in a long chain.

Philosophically, what Jacquard accomplished was to separate the work of weaving from the information of weaving. In a sense, it was the beginning of a long process of information losing its body. In this case, the information was stored on a very light card and the mechanical device that read the cards did so very carefully. From there, the power was amplified from the card-reading needles to huge machinery that pulled the warp threads into position. It may ultimately have taken several hundred pounds of force to lift all the threads. You can say that Jacquard found a way to literally leverage information.
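The separation Gessler describes, with the pattern held on cards and the lifting done by the machine, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the card layout, the `CARD_CHAIN` data, and the function names are invented here and do not reproduce any historical card format.

```python
# Hypothetical card chain: each string is one card, one character per
# warp thread. "O" is a punched hole (raise the thread), "." is blank.
CARD_CHAIN = [
    "O.O.O.O.",
    ".O.O.O.O",
    "OO..OO..",
    "..OO..OO",
]


def raised_threads(card):
    """Return the indices of the warp threads that one card raises."""
    return [i for i, mark in enumerate(card) if mark == "O"]


def weave(chain, passes):
    """Read one card per shuttle pass, looping around the laced chain.

    Each returned row marks raised threads with "#" and lowered
    threads with a space.
    """
    rows = []
    for shuttle_pass in range(passes):
        card = chain[shuttle_pass % len(chain)]
        rows.append("".join("#" if mark == "O" else " " for mark in card))
    return rows


if __name__ == "__main__":
    for row in weave(CARD_CHAIN, 8):
        print(row)
```

Changing the pattern means swapping `CARD_CHAIN` for a different list; `weave` stays the same, which is the point of the separation.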

How did punch cards make the transition from weaving into computers?

Jacquard’s invention so impressed Charles Babbage (1791–1871), who is often regarded as the father of computing, that Babbage incorporated punch cards for storing information on his Analytical Engine. Babbage also greatly prized a silk portrait of Jacquard that had been woven in his memory. Actually, Jacquard used two sets of cards for his equipment and in the weaving industry you often see early images of looms with two sets of punch cards—one to store information about a particular pattern and one to control other repetitive processes in the weaving. The punch card has survived through to the present day in a number of forms.

What happened after Jacquard?

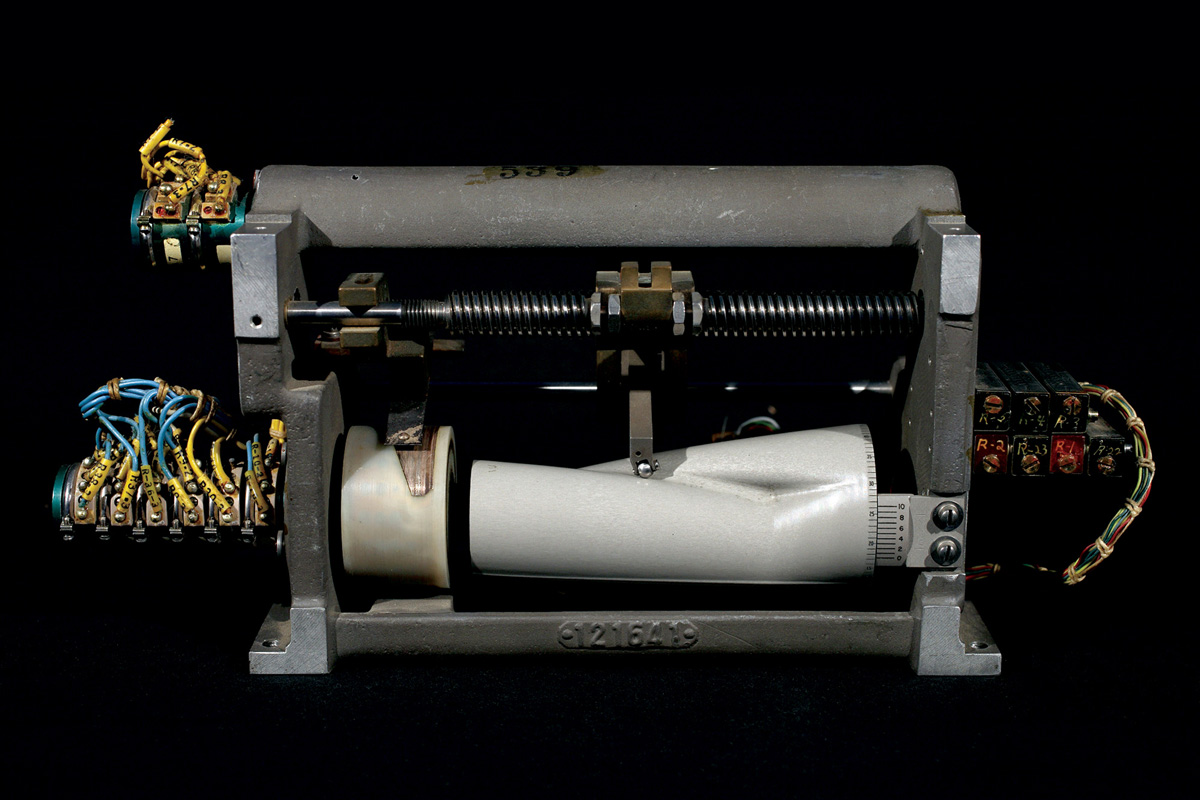

Another place where memory storage was needed was for entertainment purposes: the cylinders and punched disks that operate music boxes, and also the punched paper rolls in player pianos. There was an almost infinite variety of these devices, including some automata programmed by large wooden cylinders with metal pins. I’ve collected some things along these lines but I’ve been more interested in devices directly associated with computing. One very interesting technology that I found recently is cam memory. One example that I really like contains information on how to adjust from the local compass reading anywhere on the earth’s surface to true magnetic north. The memory storage unit consists of a very lopsided cylinder of aluminum that is actually a map of the earth. At first when I saw it I thought the device had been smashed, but when you look at it closely, you realize it’s been carefully machined. There’s a stylus that can be run along the axis of the cylinder, much like a phonograph needle, that reads the radius at any point around the surface. It’s this radius that encodes the deviation of the local magnetic field. It’s quite a beautiful object. There were also computational devices in aircraft navigation systems—you can still occasionally find these in electronics swap meets and junk yards—that have ball disc and roller integrators, which were able to mechanically monitor a plane’s direction and speed and translate that into latitude and longitude. Those are pretty amazing, and I’m sure were extremely costly to produce. Of course, you can do all that now with a few lines of computer code.
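As a rough illustration of the "few lines of computer code" that now stand in for a mechanical integrator, the Python sketch below accumulates speed and heading readings into latitude and longitude. It assumes a spherical Earth, headings measured clockwise from true north, no wind, short time steps, and positions away from the poles. The function name and the sample format are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; a spherical Earth is assumed


def dead_reckon(lat_deg, lon_deg, samples):
    """Integrate (speed_kmh, heading_deg, hours) samples into a position.

    Headings are degrees clockwise from true north. Each sample is
    treated as one short, straight leg.
    """
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    for speed_kmh, heading_deg, hours in samples:
        distance_km = speed_kmh * hours
        heading = math.radians(heading_deg)
        # The northward part of the leg changes latitude; the eastward
        # part changes longitude, scaled by the shrinking circumference
        # of the parallel at the current latitude.
        dlat = distance_km * math.cos(heading) / EARTH_RADIUS_KM
        dlon = distance_km * math.sin(heading) / (EARTH_RADIUS_KM * math.cos(lat))
        lat += dlat
        lon += dlon
    return math.degrees(lat), math.degrees(lon)


if __name__ == "__main__":
    # Hypothetical flight: one hour due north, then half an hour due east.
    print(dead_reckon(34.0, -118.5, [(400.0, 0.0, 1.0), (400.0, 90.0, 0.5)]))
```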

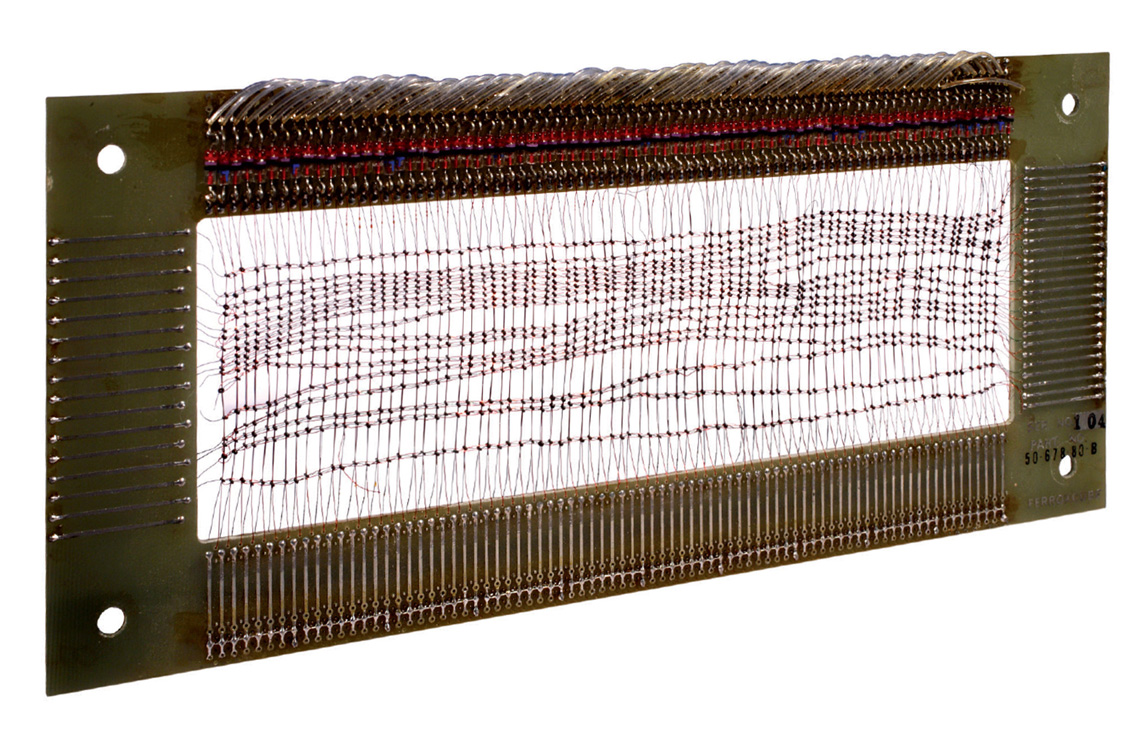

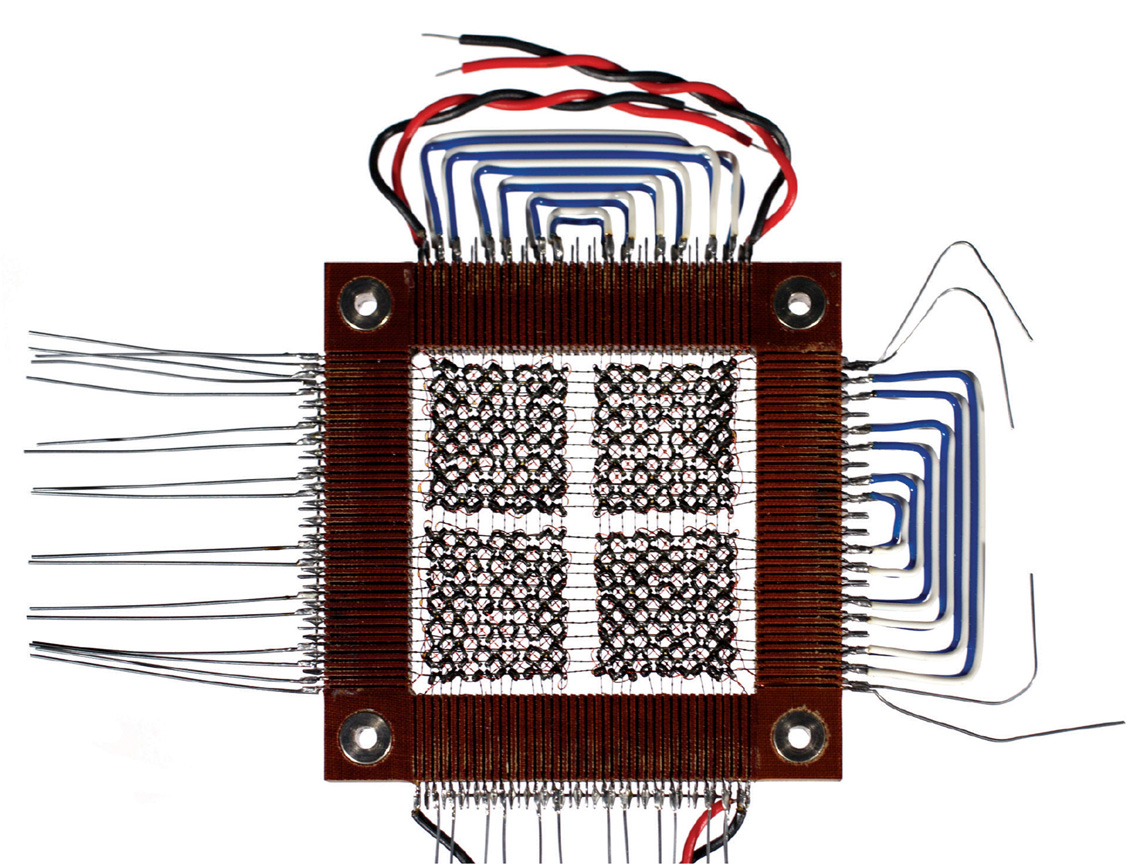

One kind of pre-silicon memory I have always loved is core memory.

Today, when people talk about core memory, they usually mean the main random access memory of a computer, but originally the term meant devices built from things called “cores,” which are little ferromagnetic beads or rings strung on a lattice of wires. You can envision a window screen with a bead at every crossing of two wires. The idea was that this could comprise a read-write memory—if you induced an electric current in a particular x wire and y wire, those two currents would add together and where they crossed it would produce enough current in the bead at that intersection to change its magnetic polarity. All these cores were hand-strung, like beading. No one could ever figure out a way to produce them mechanically, so they were handmade at great expense, mostly by women in factories in Asia. They are still used in aerospace and military applications because, unlike microchip memories, they are impervious to the electromagnetic pulse of nuclear weapons. They are also impervious to corruption by cosmic radiation, so you’ll find them in space vehicles, lunar landers, and aircraft. I have heard that NASA occasionally buys core memories on eBay, which I guess is cheaper than getting new ones.
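The addressing scheme described here, in which only the bead at the crossing of the two driven wires sees enough current to flip, can be modeled with a small Python sketch. The current values and the threshold are arbitrary illustrative numbers, and the class and method names are hypothetical. The destructive read with write-back is not mentioned in the interview; it is added from general knowledge of how such memories were read.

```python
HALF_SELECT = 0.5  # current on one driven wire, arbitrary units (assumed)
THRESHOLD = 0.75   # current needed to flip a core (assumed)


class CorePlane:
    """A grid of cores; cores[y][x] holds the polarity (0 or 1) at wire x, wire y."""

    def __init__(self, rows, cols):
        self.rows = rows
        self.cols = cols
        self.cores = [[0] * cols for _ in range(rows)]

    def _pulse(self, x, y, polarity):
        """Drive wire x and wire y, flipping any core that sees enough current."""
        flipped = []
        for r in range(self.rows):
            for c in range(self.cols):
                current = 0.0
                if r == y:
                    current += HALF_SELECT
                if c == x:
                    current += HALF_SELECT
                # Cores on only one driven wire stay below the threshold.
                if current > THRESHOLD and self.cores[r][c] != polarity:
                    self.cores[r][c] = polarity
                    flipped.append((r, c))
        return flipped

    def write(self, x, y, bit):
        self._pulse(x, y, bit)

    def read(self, x, y):
        """Destructive read: force the core to 0 and note whether it flipped."""
        bit = 1 if self._pulse(x, y, 0) else 0
        if bit:
            self._pulse(x, y, 1)  # write the value back
        return bit
```

A short usage example: after `plane = CorePlane(4, 4)` and `plane.write(2, 1, 1)`, only the core at the crossing of wires x=2 and y=1 has changed, and `plane.read(2, 1)` recovers the stored bit.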

Some of the core memories in your collection are so intricate—you can barely see the individual rings.

At the end, they became so small that they had to be assembled under a microscope. Still by hand! In general, the smaller the cores, the later they were made. All technologies are like that—eventually, they become so small you’re not really conscious of the system.

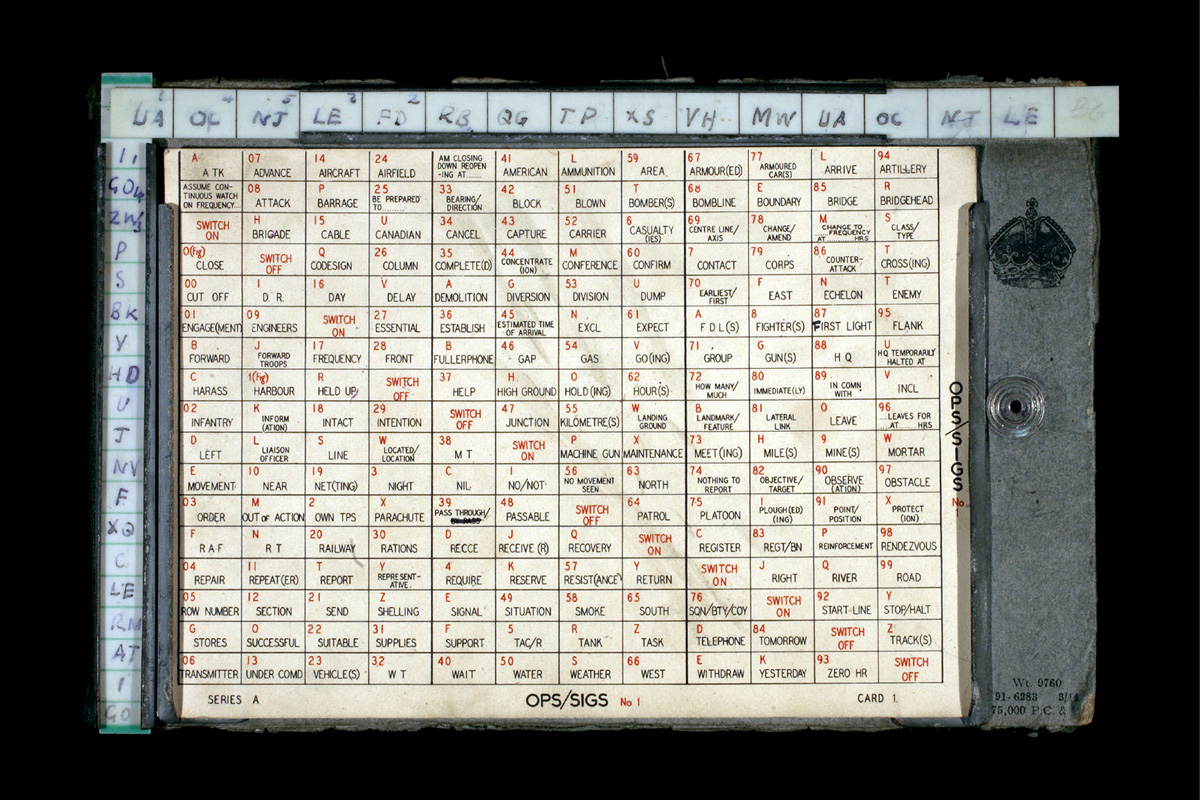

You also collect cryptographic devices. A lot of them seem to be based around a wheel that has some sort of alphabet written around the perimeter.

That’s what’s called a Jefferson Wheel. Thomas Jefferson was probably not the inventor, but he is credited as the originator. One such device was the M-94 used by the Signal Corps of the US Army. It was used for tactical communications and consists of twenty-five aluminum discs. Around the periphery of each disc is a random alphabet, and each disc has a different alphabet plus a designator or identifier on it. The discs are lettered from B to Z. In order to send a message between the two of us, you and I would have to agree that on a certain day we would set the discs in a certain order, which could be specified by the letter designator of the discs. In order to encode a message, you would rotate these discs one at a time until the original message (the plain text) appeared along one line. Then you’d rotate the whole device and pick another line of letters and send that as the encoded message. At the receiving end, the other person would arrange their M-94 to display this encoded message and then turn the whole thing around until they found a line that spelled out English (the original plain text message). You’d send the message itself over radio by Morse code; this was just the system for doing the encoding.
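The procedure described above maps fairly directly onto code. The Python sketch below is a minimal model under loud assumptions: the disc alphabets are generated from a seeded random shuffle and are not the real M-94 alphabets, the function names are invented, and the message is limited to one letter per disc (at most twenty-five uppercase letters).

```python
import random
import string


def make_discs(seed=0):
    """Build 25 hypothetical discs, designated B to Z, each a scrambled alphabet."""
    rng = random.Random(seed)
    discs = {}
    for name in string.ascii_uppercase[1:]:
        letters = list(string.ascii_uppercase)
        rng.shuffle(letters)
        discs[name] = "".join(letters)
    return discs


def read_line(discs, order, text, offset):
    """Line up `text` along one row, then read the row `offset` steps around.

    `order` is the agreed sequence of disc designators (the daily key).
    """
    out = []
    for name, ch in zip(order, text):
        alphabet = discs[name]
        out.append(alphabet[(alphabet.index(ch) + offset) % 26])
    return "".join(out)


def encode(discs, order, plain, offset):
    """The sender aligns the plain text and copies off another line."""
    return read_line(discs, order, plain, offset)


def candidates(discs, order, cipher):
    """The receiver aligns the cipher text and inspects the 25 other lines."""
    return [read_line(discs, order, cipher, k) for k in range(1, 26)]


if __name__ == "__main__":
    discs = make_discs()
    order = list(reversed(string.ascii_uppercase[1:]))  # hypothetical daily key
    cipher = encode(discs, order, "ATTACKATDAWN", 7)
    for line in candidates(discs, order, cipher):
        print(line)
```

Note that the receiver never needs to know which line the sender chose: one of the twenty-five candidate lines is the plain text, and it is recognized simply because it reads as language.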

Would there be a book that would tell them the order to arrange the discs on a particular day of the year?

Right. They would have a schedule that said, “On this day, we arrange the discs in this order, and then the next day a different order,” or week by week, or month by month. I’m not sure exactly what the agreement was. These were used up to the beginning of World War II.

It seems pretty complex to use.

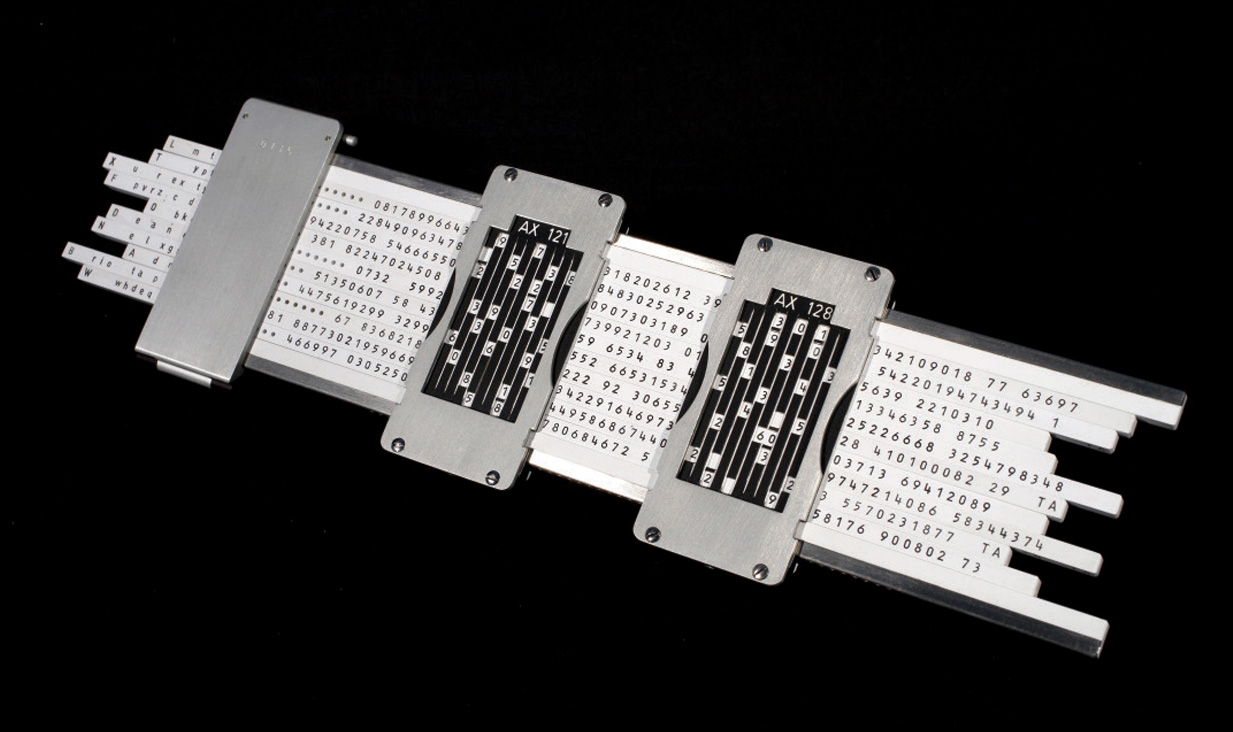

But they got even more complex. One device that’s pretty amazing is a handheld thing used by the West Germans for creating what’s called pseudo-random keys. It looks like a slide rule with a series of twenty-five sticks that have a square cross section, and each of these sticks has on each of its sides a random selection of numbers. You and I would agree on a selection of ten sticks, and put them in the slide rule in a certain order, each in a certain orientation and position. Periodically we would be sent a list of the correct settings as well as a new grille that served as a cursor. We would insert each stick the right way and read off the random key by going down the columns on the grille, turning it over and doing it again on the reverse side, then moving the cursor over. The whole thing was so cumbersome that nobody could use it in a field situation. If I were to open it up now to try and reset it, all the pieces would fall out, which I’m sure is exactly what happened to many people in the field. Wonderful device, but so complex it was impossible to use.

Today, we have software programs that can generate far more complex codes than these physical devices. Why do you think it’s important for people to understand the material history of computing?

It’s important to realize that the things on our desktops today are just the current instantiation of things that think. Like living organisms, they have ancestors. From an anthropological perspective, I think we have to understand culture and cognition not just in terms of how humans interact and pass information from one to another, but in terms of physical things as well. Edwin Hutchins talks about “distributed cultural cognition” in the sense that cognition, that is culture, is not just in our heads but also distributed technologically in the physical environment—in how a room is laid out, for example, and how people react in that room. Cognition is instantiated in artifacts, workplaces, architectures, and in the layout of cities—all these physical things are, in a sense, computing devices. This brings up the whole epistemological question of how we as cultures exchange information. I believe that in order to understand this, we have to look at our material environments, which is what motivates me in collecting these examples of distributed human cognition.

What you’re suggesting is that information is always embodied. Today, however, we seem to be evolving the fantasy that we can have a completely dematerialized infomatic realm, a total virtual reality. That’s the idea behind many cyber-fictions. Do you think that’s a delusion?

The only thing I can think of that’s disembodied information is information as pure energy—electromagnetic propagation, radio waves, light, and so forth. But even that always has some mass or energy carrier. Information cannot be disembodied in the sense that it loses any sort of carrier. One way to talk about this is a term I’ve appropriated—“intermediation.” This expresses the idea that information is carried in different kinds of media, each with a life of its own. What’s important to understand is the way information is changed as it is transferred from one medium to another. I think it’s a challenge to social scientists and anthropologists to really work on a theory of intermediation, which for most practical purposes means understanding how information is modified in character as it is carried on different physical devices.

Nicholas Gessler is one of the executive directors of the Center for Human Complex Systems at the University of California, Los Angeles.

Margaret Wertheim is director of the Los Angeles-based Institute for Figuring, an organization devoted to enhancing the public understanding of figures and figuring techniques. Also a science writer, she pens the “Quark Soup” column for the LA Weekly, and is currently working on a book about the role of imagination in theoretical physics. See www.theiff.org
